GenAI Video & Audio: Tools, Tradeoffs & Lessons

It started with a 90-day challenge: make a GenAI-powered video promoting the 2025 CMO Super Huddle using only off-the-shelf tools. What followed was equal parts ambition, frustration, learning, and editing. Along the way, Drew got a crash course in prompt writing, script timing, voice cloning, and the realities of working inside tools that promise automation but still require a certain level of finesse.

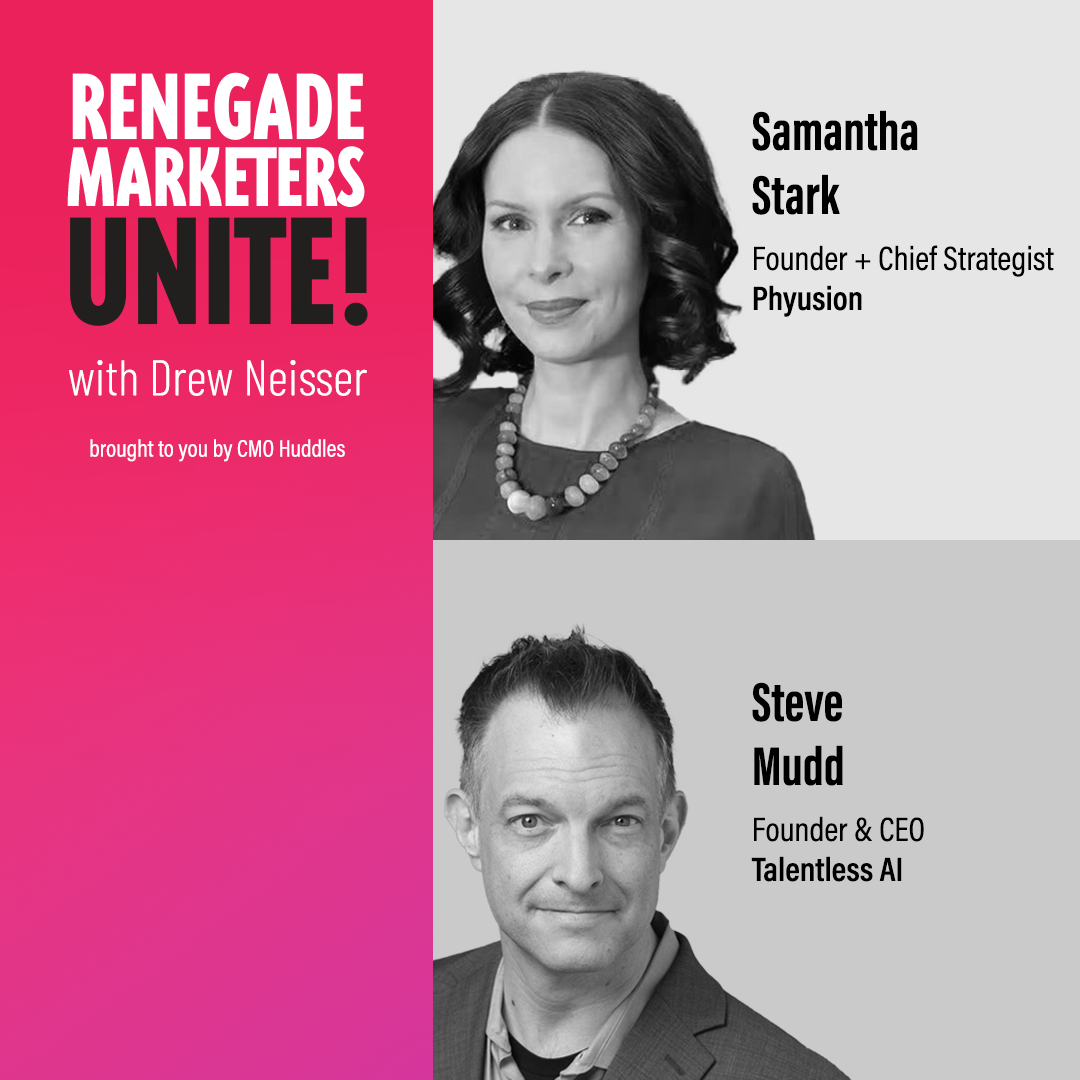

With GenAI coach Samantha Stark of Phyusion guiding the early stages and Steve Mudd of Talentless AI stepping in for post, the project quickly became a real test of creative endurance. Each step surfaced a new set of tradeoffs. The tools were powerful, but stitching them together was anything but seamless. What came out the other side is something Drew’s proud to share, along with lessons from two expert AI collaborators and a few fun reveals they brought to the table that show just how weird, clever, and unexpected GenAI production can get.

In this episode:

- Samantha shares how GenAI tools spark ideas but still need human direction to shape tone and story

- Steve explains how editing brings structure and emotion to GenAI content for a more watchable result

- Both guests highlight the importance of adding context to make GenAI output resonate with viewers

Plus:

- What GenAI tools need from you upfront to deliver useful output

- How multi-tool workflows impact timing, syncing, and storytelling

- Where to focus your time during GenAI production for the biggest payoff

- When expert editors can step in to shape flow, tone, and polish

Tune in for a behind-the-scenes look at GenAI video and audio creation, guided by the experts who know how to make it all come together.

Renegade Marketers Unite, Episode 469 on YouTube

Resources Mentioned

- Tools mentioned

  - Video Generation: Veo 3, LTX Studio, ImagineArt, Runway ML, Sora, Synthesia

  - Image Generation & Asset Creation: Midjourney

  - Voice & Audio Generation: ElevenLabs, Suno, HeyGen

Highlights

- [4:12] Samantha Stark: How Phyusion powers AI adoption

- [7:09] Your AI needs a creative brief

- [9:53] Know your AI’s limits

- [12:18] The charm (and chaos) of ImagineArt

- [15:30] No silver bullet for gen video

- [18:39] AI stitched Samantha's holiday video

- [21:35] Making the CMO Super Huddle 2025 video

- [25:14] Steve Mudd: Why I started Talentless AI

- [26:35] Steve Mudd saves the EU (with cats)

- [30:57] From prompt to production

- [33:05] The final cut: CMO Super Huddle 2025

- [35:14] The future is hybrid

- [37:35] Watch this image start moving

- [39:27] Clone yourself to save time

- [42:42] Time to call in the pros

- [47:51] The creative renaissance is here

Highlighted Quotes

“Prompt engineering to me is very similar to data science—garbage in, garbage out. So as a senior communicator, when you're prompting for video generation, think about briefing your creative team.”— Samantha Stark, Phyusion

"The bad news with the good news of democratization is your content has to be really great, because you know what? It's getting really easy to create really great content. So the bar goes up."— Samantha Stark, Phyusion

"To me it's the beginning of a creative renaissance. You're seeing this initial phase of, oh, it's slop and it's weird. And yes, all of that is true, but this is also perhaps the most interesting and complicated art form we've ever created as humans."— Steve Mudd, Talentless AI

"This will be the future of creativity. The nice thing is, it is democratized in a lot of ways. Stock footage to me looks so bad now—it's almost like it raises the expectations."— Steve Mudd, Talentless AI

Full Transcript: Drew Neisser in conversation with Samantha Stark & Steve Mudd

Drew: Hello, Renegade Marketers! If this is your first time listening, welcome, and if you're a regular listener, welcome back. Before I present today's episode, I am beyond thrilled to announce that our second in-person CMO Super Huddle is happening November 6th and 7th, 2025. In Palo Alto last year, we brought together 101 marketing leaders for a day of sharing, caring, and daring each other to greatness, and we're doing it again! Same venue, same energy, same ambition to challenge convention, with an added half-day strategy lab exclusively for marketing leaders. We're also excited to have TrustRadius and Boomerang as founding sponsors for this event. Early Bird tickets are now available at cmohuddles.com. You can even see a video there of what we did last year. Grab yours before they're gone. I promise you we will sell out, and it's going to be flocking awesomer!

You're about to listen to a Bonus Huddle where experts share their insights into the topics of critical importance to our flocking awesome community, CMO Huddles. In this episode, Samantha Stark, who is a Gen AI coaching consultant, and Steve Mudd, who is a Gen AI video producer, share the process that the three of us went through to create a flocking awesome video about the CMO Super Huddle. I got to learn how to use these tools, and you'll get an understanding of all the tools that are out there, the amazing possibilities they open up for voiceovers and visual generation, and how, ultimately, this is going to allow you to do better video faster and, more generally, embrace a medium that seemed to be out of reach. If you like what you hear, please subscribe to the podcast and leave a review. You'll be supporting our quest to be the number one B2B marketing podcast. All right, let's dive in.

Narrator: Welcome to Renegade Marketers Unite, possibly the best weekly podcast for CMOs and everyone else looking for innovative ways to transform their brand, drive demand, and just plain cut through, proving that B2B does not mean boring to business. Here's your host and Chief Marketing Renegade, Drew Neisser.

Drew: Hello, huddlers. Exactly three months ago, I had my first call with Samantha Stark, who we know as Sam, about generative AI video. Sam had come highly recommended from a fellow huddler, and as I was determined to tap into her expertise, we agreed on a call that she would coach me through the production of a video using generative AI from start to finish. I think if I knew then what I know now, I never would have agreed to this project. I'm not sure Sam would have either. But anyway, I figured, what better way to demonstrate the possibilities and pitfalls of a wide range of video and audio tools than to try to create one? So we also set a deadline for ourselves, booking this bonus huddle exactly 90 days out from the call with nothing but a topic in mind for the video. And the topic, of course, was the CMO Super Huddle. So about eight weeks into it, I told Sam that I had reached my level of incompetence, having created a video that was nowhere near flocking awesome. In fact, I'm fairly certain a seventh grader could have done better, and that was big lesson number one: if you want to create a professional-looking video longer than a few seconds, you'll want to work with professionals. That's when we called in the cavalry. The cavalry, pardon me, in the form of Steve Mudd. And thanks to Steve and his associates, we now have something I can't wait to share with y'all. But before we show you that, let's welcome our special guests, starting with Sam Stark of Phyusion. Hello, Sam.

Samantha: Hi everyone.

Drew: How are you and where are you this fine day?

Samantha: Oh, I am very good. I am in Brooklyn, New York, hiding from my new puppy in the other room. So that's why I'm in a dark little room here, but they're in the back room.

Drew: New puppy, all right. Well, I can't wait to see that puppy animated in video with its little mouth moving.

Samantha: That's actually already happened.

Drew: Oh, there it is. Okay, see, we did not rehearse that. So let's talk a little bit about what you do and how generative AI video fits into your consulting practice.

Samantha: Sure. So Phyusion is really known for innovation within communications. And of course, right now within marketing communications, there's nothing more important to changing how we work than generative AI. So we're very much focused on strategy, adoption, and support for your team. We work with big enterprises, as well as some mid-sized companies, both B2B and B2C, taking a look at the content you're putting out into the world and the tech stack that you're currently on. We cover the full piece of it, from setting your KPIs and your strategy, to choosing your tools, to reforming your workflows and going through a process, to retraining your team. So it's really soup to nuts. We have some great clients, like New York Life, and I've worked with Citizens Bank, and I think it's those clients that have big content needs where we really lean in. And so it's everything from, like I said, what tools should you be on? Should you create agents, and what's going to really work for you? Because every situation is honestly different in terms of adoption.

Drew: And we talked a lot about tools along the way. So it's great, we're gonna and we'll share some of those, but you specifically, kind of, you've leaned into Gen AI video quite a bit. Talk a little bit about that, and how you sort of came to discover this. Pretty cool.

Samantha: Well, my journey has been a little bit different, in the sense that I really wanted to learn myself, so the process that we went through, Drew, with you, is similar to what I did myself, and actually it's similar to what I do for every single tool that I learn. Give yourself a very meaty project to really dig in deeply. So this past holiday, back in November 2024, actually, is when I decided I would create the Phyusion holiday card, from soup to nuts, using generative AI. So starting from doing my strategy within a custom AI workspace within Claude, into fully generating the music, doing the editing, choosing different video models for different means, in terms of animation. But that's actually how I approach everything: use the tools for your business first. And now we apply that from a content creation standpoint. So for example, we work with a company called Outdoorsy. A lot of people think of them as Airbnb for RVs. We looked at how they create short-form content based upon their tech stack, and how they go to market, even with Facebook advertising and things like that, and for them, let's say, Google's VideoFX makes sense, because their entire tech stack is built that way. And frankly, it's very powerful from that perspective too. But we're really in this moment where short-form video is great right now. Filling in the gaps is really great right now, but it's just going to get better, as we all know.

Drew: Yes, okay, so we started this project. I sort of think I can't remember if you told me to go to ChatGPT or I just did. But what was fun for me is I got to when you go into ChatGPT and you don't say, just write a video, you sort of work on this strategy with them and help do that. And I put in a lot of details before moving to the script. Can you talk a little bit about that phase, why it's important, and what kinds of things when you're starting a video project, you should include in your prompts?

Samantha: Yeah, for sure. And I would actually recommend not just using a public platform like that that doesn't understand your business for this type of strategic planning. So I use all of the various different LLMs, but for something like a video planning project, I'm going to be using Claude, because I have built a bunch of customized AI workspaces. A workspace might be a customized GPT, it might be a project within Claude, it might be a Gem within Gemini for Google, but I essentially have a CMO project within Claude, where that little space that I have understands my target audience. It understands my business. It knows who I am. It understands so much detail about my messages, as well as even things like SEO keywords. So when I go in to sort of prompt and create my video, it already understands the goals of my business, so you're not just starting with a basic back and forth. Otherwise it's going to be very generic. So as for the framework for something like that: when you're talking about prompt engineering, prompt engineering, to me, is very similar to data science, like garbage in, garbage out. So as a senior communicator, when you're prompting for video generation, think about briefing your creative team. Frankly, you know, what would you tell a creative team or an agency? What are my goals for this video? What is my target audience for this video? How do I want to make them feel? And that, to me, is what's been very interesting, especially when you're generating clips: the feel that you need to get is really important. Those types of things, and then the aesthetic and your style. So truly, you're spending your thinking up front, in the prompt, when you're starting the whole process. So it's a different workflow, which is what Gen AI is doing across the board: creating new types of workflows that require creativity and strategy at different points.
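As a rough illustration of the "brief your creative team" idea above, here is a minimal Python sketch that keeps the briefing questions (goal, audience, feeling, style) in one place and prepends them to each scene prompt. The `CreativeBrief` class, its field names, and the sample values for feeling and style are all hypothetical; no tool discussed in the episode is known to require this format.

```python
from dataclasses import dataclass


@dataclass
class CreativeBrief:
    """Hypothetical container for the briefing questions named in the conversation."""

    goal: str      # What are my goals for this video?
    audience: str  # Who is the target audience?
    feeling: str   # How do I want to make them feel?
    style: str     # Aesthetic and visual style

    def to_prompt(self, scene: str) -> str:
        """Prefix a single scene description with the shared brief."""
        return (
            f"Goal: {self.goal}\n"
            f"Audience: {self.audience}\n"
            f"Desired feeling: {self.feeling}\n"
            f"Style: {self.style}\n"
            f"Scene: {scene}"
        )


# The feeling and style values are illustrative assumptions, not the real brief.
brief = CreativeBrief(
    goal="Promote the 2025 CMO Super Huddle",
    audience="B2B marketing leaders",
    feeling="Inspired and part of a community",
    style="Playful penguin animation",
)

print(brief.to_prompt("Penguins huddle together in a snowstorm"))
```

Writing the brief once and reusing it for every scene is one way to avoid the generic back-and-forth Samantha warns about; how much of it a given video model actually honors will vary by tool.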

Drew: For me, this was real. This was the fun part, because I really liked the way it would ask me questions as we were going back and forth, and help sort of hone down the strategy. Said, okay, I get it. And then we got to a script and a storyboard, and even prompts for the animation sequence. I love it when it does things like this. It gives us a storyboard, and then it says, hey, would you like the prompts for the animation sequences? And you go, yeah, that'd be cool. Thank you for that. So for those new to AI video production, what are the signs that you're sort of on the right track at the storyboard stage?

Samantha: Yeah, I would say there's a lot of things you need to understand about the technology. You absolutely need to understand the technology because, just frankly, it's going to tell you it can do these amazingly crazy things that it can't. So if you don't know how to vet that when you're in the process, then you're going to have some problems because you're going to fall in love with a concept that maybe isn't possible from that perspective. But really, what you need to look for when you're getting to that storyboard phase is to do a bit of—and I'm not talking about great storytelling, because all those classic things about what makes a great story, the story arc, the characters, the continuity, all of that I'm leaving as, you know, great storytelling is great storytelling, whether it's generative AI or not. But when you're actually doing the storyboarding within generative AI, you need to think about, you know, how much video can you generate at a time. That's an important consideration because some tools can generate two minutes of video. Some tools are going to generate eight seconds of video. You have these different levels. You have one video tool that has character continuity, meaning you can put your character into different video clips. And you have a bunch of tools that cannot. And then you have tools like Veo with Veo 3, which just came out, which is a massive game changer that also has speech and sound—everything is integrated into that one clip. So you've had big companies already put out ads just using Veo 3. I think I saw it was the NBA finals or something along those lines, but that cost $2,000 to make.
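To make the clip-length consideration concrete, here is a small Python sketch of the arithmetic: given a target running time and a tool's maximum clip length, how many separate generations does the storyboard need? The function names are hypothetical, and the 60-second target is only an example figure; the 8-second and two-minute limits are the ones mentioned above.

```python
import math


def clips_needed(total_seconds: int, max_clip_seconds: int) -> int:
    """Number of separate generations needed to cover the full running time."""
    if total_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    return math.ceil(total_seconds / max_clip_seconds)


def split_into_clips(total_seconds: int, max_clip_seconds: int) -> list:
    """Clip durations: full-length clips followed by one clip for the remainder."""
    count = clips_needed(total_seconds, max_clip_seconds)
    durations = [max_clip_seconds] * (count - 1)
    durations.append(total_seconds - max_clip_seconds * (count - 1))
    return durations


# A 60-second video on a tool capped at 8 seconds per clip
print(clips_needed(60, 8))        # 8
print(split_into_clips(60, 8))    # [8, 8, 8, 8, 8, 8, 8, 4]

# The same video on a tool that can generate two minutes at a time
print(clips_needed(60, 120))      # 1
```

Each clip boundary is also a place where continuity can break, so the clip count is a rough proxy for how much stitching and editing the storyboard implies.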

Drew: We'll include it in the show notes. I really enjoyed that video.

Samantha: Definitely. It was really funny. Yeah.

Drew: And so one of the things we started out with, and I think it's important: this wasn't a case where I said, "Okay, we're going to do a 60-second video, create the whole video." I didn't have access to a tool like that, and I wasn't going to pay for a tool like that, because at that moment, there wasn't really anything affordable for us to do. So we decided together that we would do sequences of things. And of course, this being CMO Huddles, there were going to be penguins and penguin animation sequences. So randomly, I met the CEO of ImagineArt. He graciously donated some free credits at the time. I had no idea how far they would go. And I'm going to actually show for a second what the ImagineArt studio looked like, and some examples of some of the videos that it created. And these were created based on the prompts that ChatGPT created for me. So we're jumping over now to ImagineArt, and you'll see that there's just a lot of different silly things going on here that'll make sense when you see the script, but you'll notice some goofy things. Like, wait, this penguin has hands. Wait, penguins don't have hands. Oh, this one has owl wings, huh? That's kind of strange. And there are other things that happen that are really kind of goofy, like this one: it starts with a hat, and then when it's finished, the hat's gone. So anyway, I want you to see this cute little thing that I did with CMO Super Huddle. Because when we get to the finished version, you go, "Oh my god, that was so lame, Drew." But you see each of these were scenes. And one of the things that I sort of learned is, you put in your prompt, you get a response, and it may not be good, so you're going to have to do it again, and you burn through credits as you're doing it. But the bottom line is, I was able to create... we thought these were pretty cute, right, Sam? Some of these scenes were actually pretty cute.

Samantha: Yeah, they're adorable. And then that's where it gets really tough in the continuity piece of it, and that's where that traditional expert storytelling needs to still come in, because there are tools out there, like LTX Studio, for example, which soup to nuts—you go into LTX Studio, and LTX Studio, by the way, recently integrated Veo 3, so it has the most powerful video generation platform. But you can do the full storyboarding there and do the transitions and generate a transition just based on a prompt, and things like that. But a lot of people—all the frustrations that you are feeling are the frustrations of the technology. So for example, it's generative—the last part of generative is the biggest part of generative AI. And so with that, that means every single output will be different with the same prompt. You can run that prompt over and over, and there will be guardrails, of course, but it's not going to be the same. The lack of control can be frustrating.

Drew: Yeah, and this really came up at one point, and I actually reached out to folks, because I could not figure it out. Following ImagineArt's instructions, I rendered this one penguin that I wanted to have in all of them in various formats and imported it, but I couldn't make it work. But I do know that ultimately that was fine for the story. And you kept saying it was fine, and by the way, this is why it's so helpful to have a coach along the way, because I was kind of throwing up my hands. "I can't get the tools to do what I wanted to do." Anyway, it sort of worked out. But are there platforms right now that you'd point folks to? Again, this is for creating sequences that you can live with, at least. And when it comes to animation, platforms you like to use?

Samantha: Yeah. So there are different platforms for different uses, for sure, and, I always think about it this way, we are still early with this technology. So there is not an easy answer. When we look at the holiday card that I mentioned from last year, which I'll show you a little bit of, I used three different video generators for it, and there's a reason for that. Runway ML, which I definitely recommend as one, had the capability to do what I needed to meet my creative goal: I created an image of myself in Midjourney, and then I wanted to have it speak. And so Runway ML was the one that was able to do that through this capability called Act-One. And then I wanted to animate some other images that I created within Midjourney as well. And Veo did the best job. I was able to place one image at the beginning in terms of when you're editing, and another image at the end, and then it'll merge them together. So that's a particular strength within Veo. But when you look at the top platforms across video overall, of course, it's still Sora. Sora is really amazing. Veo 3 now is really amazing, particularly when you want that sound. Runway ML is really good. There are others like Pika that are really good. There are some Chinese ones, like Kling, that people seem to really love as well. And even within those types of video generators, you can get in there. With something like Kling, let's say your penguin was here, but you want it over there, you can grab it and move it and then regenerate it, and your penguin's over there. So we're getting piece by piece into that greater level of control, but to really, really bring it to life the way you would have seen it, we still have some time.

Drew: I want to make sure that I explained ImagineArt well enough, because I want to thank them publicly for this. They have all the platforms that you just mentioned. They don't have Runway, but they do have Kling, in various versions, and they have Veo, and when you want to do something like take an image and just animate it really quickly, it's amazing. Most of the ones that I created were from words to video, but they will also take an image and animate it. So I think I just wanted to make sure that we covered that, because there are a lot of ways to get to the content that you're looking for for these short sequences. And by the way, I think that's a really good moment to just talk about something: you created a little promo video that I love for this event. And I don't know if you can sort of find a way to show that, but it is an example of using video really quickly and using sort of the sync version of talking that you were describing. Can you share that?

Samantha: You know, I would have to go literally into my LinkedIn to pull it up. So I don't know if we want to do that.

Drew: Okay, let's skip that then for a second. Why don't you show a little bit of your video, and go into Kapwing—go into Kapwing, show that video, show some of the things you were talking about, and I'll find the LinkedIn video.

Samantha: Okay, that's perfect, right? So here, what folks should be seeing is the back end of Kapwing. And so when I made my video to really go deep last year for the holidays, what I did is I used that Claude project I mentioned. I finalized my script, my run of show, I decided on my style, the type of music that I wanted, et cetera, and then I basically generated everything, piece by piece. And that's the important piece: you're generating the aspects one by one, and then pulling them all together into what's more similar to a traditional type of video editing. This has a bunch of AI features. There are a bunch of other ones, like Opus Clip and others that do very similar things. I know Drew had that. But the difference in this production is you are generating across a bunch of different AI models, pieces that you can then drag into one central place. And so my music came from Suno, for example. Everything that you're going to see in here came from these different platforms that are good. And so if you look over here on the side, here's a bunch of the different things that I had to upload to get into one succinct video. Over on here, you can see you've got visuals, audios. You can do a transcript. You can actually generate new AI voiceovers to be able to add into it. You can add subtitles, those types of things. And it's super simple. That was literally the first video that I'd ever created, the holiday card, which we'll have to link to. It is on YouTube, and I'd never done it before, so I had to learn this soup to nuts. But the good thing is that it's created for people who don't know how to use it. So you can literally drag and drop things. So if I were to just grab this here, I can just take it over, put it into my timeline. See these different layers, and there it is. It starts with that, and you can clean up your audio.
So I just did it on a regular phone, cleaned my audio using AI to get it to close to studio, and then added in the various different layers. And if I was even missing some of the content here, one of the things that I could be able to do is I could actually just generate filler content. And a lot of these platforms, you can literally do it right there. You can say I need a three-second clip that's going to do X, Y, Z, and you'll get some various different options. And so I can just play a little clip for you here, and you can drop in your own visuals as well, and hopefully everyone will be able to hear it.

Video: The happiest of holidays and a 2025 filled with bold, new adventures and meaningful connections.

Samantha: So all the animation, et cetera, was all done within various different platforms. And then I just dropped my own logo, which, by the way, I created the logo using Canva, which has some really impressive AI features at this point as well.

Drew: Cool. That's awesome. Okay, so I want to fast-forward a couple of things so I can show you what we got to, and then we can get to Steve. So one of the things I did is I recorded the voiceover with this equipment that you see here. I use GarageBand, which is, you know, very capable of high-quality sound. I also use Suno to create my soundtrack for this. I reverse-engineered this, thanks to Sam's instructions, where I had a song in mind. I went on ChatGPT to give me a description of this kind of music, and then I entered that as a prompt into Suno, and it was pretty excited about it. You can barely hear the soundtrack in the demo. Now, for the first track of this thing, I used a tool called Descript. The reason we used this is that we already had the license to it. We were using it for a podcast. It's a great tool for podcasts. It's unbelievable at helping us change the script, edit it, create short videos. It's a fantastic tool. What it is not awesome at, at least in my hands, is editing at the fine-tuned level that Kapwing offers. But here's the video. This is what I was able to create. Again, all we were trying to do at this point is to figure out: did this video work?

Video: When conditions get harsh, the savviest marketing leaders huddle up. 2024 was flocking awesome. 2025, flocking awesomer. On November sixth and seventh, 101 marketing leaders will flock together in Palo Alto for two unforgettable days of sharing, caring, and daring each other to greatness. B2B marketing has always required courage, curiosity, and creativity. Today, it also takes AI centricity. It's a lot unless you have a strong community behind you. Join the flock. Apply now for the most inspiring CMO gathering of 2025.

Ad Break: This show is brought to you by CMO Huddles, the only marketing community dedicated to B2B greatness, and that donates 1% of revenue to the Global Penguin Society. Why? Well, it turns out that B2B CMOs and penguins have a lot in common. Both are highly curious and remarkable problem solvers. Both prevail in harsh environments by working together with peers, and both are remarkably mediagenic. And just as a group of penguins is called a Huddle, our community of over 300 B2B marketing leaders huddle together to gain confidence, colleagues, and coverage. If you're a B2B CMO, why not dive into CMO Huddles by registering for our free starter program on CMOhuddles.com? Hope to see you in a Huddle soon.

Drew: So I wasn't happy with what we got from that version. It was okay. The script is okay. The sequence, okay. But boy, is this unprofessionally edited. Boy, is that video sequencing not very well done. And at that point, I raised my hands and said, "Let's cancel the podcast. I can't do any better." Sam talked me off the cliff and said, "I want you to meet a friend, Steve Mudd." And so I think that's a moment where we should bring in Steve and sort of move that conversation to what was his world. So welcome, Steve. How are you, and where are you on this amazing day?

Steve: I'm doing well, Drew. I'm down in Austin, Texas.

Drew: I love it. So give us a quick background on yourself and the company.

Steve: Short story: I've been around B2B marketing forever. I worked at Ogilvy for a while, primarily on IBM, and then ran content marketing and strategic messaging for NetApp. I saw a tool early on called Synthesia, which allows you to create talking-head videos. And I had spent so much money over the years on talking-head videos that I was so inspired by Synthesia. I'm like, "I gotta figure this out." And so I jumped into the AI thing. And so we started Talentless AI. That's my company. We've been around, I guess, a couple of years now, and just playing with everything, like every creative AI tool out there, from the video tools, the audio tools, and just trying to stay abreast of it. So we're building our own content. You know, I probably have like 10 or 12 different YouTube channels and TikTok channels that I've stood up just kind of for fun. AI cats: I do a lot of AI cat videos, too many for a grown adult. You know, we're working with some B2B brands and some consumer brands on content, but ultimately, looking at what is the world of, if you want more buzzwords, agentic storytelling at scale, like, how do you start to use these tools to enhance the overall communications platform. So that's the explanation.

Drew: You've created this amazing series of "Steve Mudd Saves the EU Takeover." Can you show some of those videos, if you don't mind?

Steve: Sure. I'll show this as one example. This is, again, out of Google's Veo 3. And it's like there's been so many iterations of this. Like, this is one of the fastest-moving waves of innovation that I think that we've ever seen, and it's not slowing down. So if we had this conversation next week, we might be talking about totally different tools. But this was created in Veo 3. I had a speaking engagement related to the EU, and so I wanted to do a video about the EU. So this will both frighten and inspire you.

Video: Steve Mudd single-handedly saves the EU with AI and cats. It began as an AI protocol, but sources confirm the cats took it further than anyone expected. We've handed partial control of the AI systems to the cats. Look, I know how that sounds. And in accordance with Article Four, the cats require daily tuna tributes and full veto power over all AI weapon systems. Tonight, the world honors Steve Mudd, a man, a machine, and a coalition of cats that stopped a continent from collapsing.

Drew: I just—I marvel at that, and I just want to ask, what were some of the technical aspects of pulling that together that you can share with the folks, at least in terms of things that—say, if you're not, if you're a little bit new to generative AI, what did it take to pull that together?

Steve: Veo 3 is in some ways the easiest tool out there to use, but also the most complicated to do well. For that particular one, I kind of had an outline of the script in my head, right? And it's worth noting that ChatGPT and Claude—they have every storytelling structure ever built into them, so you can ask for a story to come out of that. So I started with just brainstorming with GPT on what the idea was, and then had GPT map out the prompts to create that video. You know, there were a couple of iterations of it, a little bit of spinning, but honestly, not that much. So, soup to nuts, that might have taken me a half hour.

Drew: Wait, to do the whole video?! Now I'm assuming that this was after you had your script and your scene descriptions.

Steve: That's with the script and the scene, and you can see, right? Like, it's a trade-off, because the AI has imperfections and weirdness. Like, you can see, like, putting words on screen is not a feature of Veo. Like, the words aren't coming out right. Like, if you want a level of consistency, it takes you some time, but if you're willing to have just a little creativity and just let the story flow. So you've heard about vibe coding. This is like vibe videoing, where you can just sit down and sort of put that sort of thing out.

Drew: And did you edit it in? I mean, what did you edit it in?

Steve: Veo has a native editor that's really bad to use. But I just took the clips out of Veo and popped them into Canva. I would say, I am not an editor. Like, I'm a writer by trade. You know, I'm a language person. I was a freelance writer for a number of years. Actually wrote a movie a lot of years ago. So I know the screenplay format; that's my strength. Editing is a whole different skill set. Like, you need good editors, period, you know, to really get the higher-quality stuff out there. But if you think about it, these prompts are essentially an extension of what we know about writing screenplays and writing shot lists, right? Like, it's not even the screenplay, because the screenplay is typically a very high level of "here's the story," but it's converting that into a shot list that these generators can sort of adapt to as well, and GPT can help get you there. It just, you know, sometimes will take a little prompting to make sure that you get that consistency and things out of it. And that one was easy, right? Because there wasn't a consistent character in each scene. I didn't do anything with the sound design. It just was what it was, which was a short, fun clip that was intended for, you know, that one-time use.

Drew: Okay, so let's talk about—let's get back to the project at hand. I mean, we sort of came to you with this video. What were some of the things that you noticed right away that you thought, "Well, yeah, maybe we could probably help with this"?

Steve: Well, again, it's always, you know, starting with that structure in mind: what is the flow of the penguin lifestyle? What is the dramatic arc that we want the penguins to follow in this process? And then there's an aspect of it on my side where it's like, okay, well, first we have to make sure we get a consistent penguin, right? We wanted the penguin with the tie and all of that. We wanted it in some different scenarios. The vision that you had on paper as a script wasn't fully reflected in what you had prompted, so it was going back to your original intent and sort of mapping out, how do you take that original intent and put that into a shot list that actually executes against what you want?

Drew: Yeah, it was just such a funny conversation, because, at least for me, it was a moment of relief with this handoff where professionals were going to take over. Before we premiere the actual video, is there anything else we should know about the production process, or do we show it and then go into that afterwards?

Steve: No, yeah, I would say for this, and again it took some iteration, we used one tool that hasn't been mentioned yet, which always deserves a shout-out in this whole process: Midjourney. You know, Midjourney is still far and away the most artistic visual image generator. Midjourney has also now introduced its own video generator, and it's gorgeous, like the stuff you can get out of that is just beautiful. So my workflow now starts in Midjourney for almost everything that we do, unless you need it to talk, because Midjourney can't talk. And that's where yours was like, "Okay, I need this for that, and this for that, and this for that." You know, because we didn't worry as much about audio, we were able to sort of extract that from the creative process and just make sure that we got the video right with the audio over the top. But yeah, I'll pause at that.

Drew: Okay, all right. Well, we are going to show the video. Here we go.

Video: When conditions get harsh, the savviest marketing leaders huddle up. 2024 was flocking awesome. 2025 is gonna be flocking awesomer. On November 6th and 7th, 101 marketing leaders will flock together in Palo Alto for two unforgettable days of sharing, caring, and daring each other to greatness. B2B marketing has always required courage, curiosity, and creativity. Today it also takes AI centricity. It's a lot unless you have a strong community behind you. Please join the flock. Apply now for the most inspiring CMO gathering of 2025.

Drew: Okay. Audience, how'd we do? Hands up if you think it got a little bit better? We got some, I see a couple. Yes, it got a little bit better. And hopefully more of you are interested in attending now. But so there's some cool things that you did here, and I'm wondering where the Midjourney was? Like, did you use Midjourney to create that ice sequence at the end?

Steve: Yeah. So that's a great example. What we did with that ice sequence is that we were able to take your logo, the official CMO Huddle logo, and there's a re-texturing feature in Midjourney. And so we asked it to re-texture it as a block of ice. And then the first iterations came out fine. And then I'm like, "Oh, wait, I forgot, the penguin needs his hat and his bow tie." So we were able to go in and say, you know, it's a hat and a bow tie. And then, once we had the image created, we were able to put that in and basically prompt "the penguin breaks out of the ice" to get to that point.

Drew: And then we had the exploding ice. All right, well, let's bring Sam back and talk about the implications of all of this we've discussed, and the idea that this is the worst that generative AI video will ever be. So how much better do you expect this stuff to get, and how soon will we get there?

Samantha: It's interesting. I think we will definitely have capabilities. We already have capabilities where people can't tell the difference. We actually do know that if you're using the right video tool in the right sequence, etc., people cannot tell the difference in most circumstances. And it's just improving at such a rate; I've never seen any technology improve at a rate like this in my career, for sure. And so right now, I would say what people need to be doing is starting to integrate it into their workflows already. It can be wherever it's working well for you. That could be a transition or footage within a current project that you already have, or that could be a wow moment within a project that you already have that only AI can do. But it's just going to get better. So I definitely would not wait. I don't know about your thoughts, Steve.

Steve: I mean, we just did a hybrid project for a client where they had an internal sales kickoff meeting and they wanted to turn their executives into Avengers, so we were able to use these AI technologies to turn them into Avengers, right? So we turned one of their leaders into the Hulk, one into Captain America. And to do it in a sequence that looks big, right? I mean, these are tools that can make little brands look really, really big, and they can sort of add that element. So I think that hybrid human-AI production is what you're going to see. A lot of Hollywood is now, I would say, officially awake to this. There have been four or five different studios similar to ours who've gotten venture funding. You know, Runway has a deal with Lionsgate right now. So this will be the future of creativity. And the nice thing is, it is democratized in a lot of ways. Like, stock footage, to me, looks so bad now, right? Like, it's almost like it raises the expectations on what you have to create, that what you create has to be more relevant to your audience, has to be a little more interesting, a little more fun. You know, in case the expectations weren't already high enough, they may be a little bit higher now, because that bar to true creativity is lower.

Samantha: Yeah, and actually that makes me think about what you brought up earlier, which is, I took the static image, just what we used to promote the show, and I animated myself and Steve within the static image having a conversation. No, it's not our voices, but it's a fun conversation. It looks incredibly realistic. And then if I put that into another tool, I could use something like ElevenLabs and actually make it our voices.

Drew: And let me show that, it's super cute, and I got a big kick out of it. And the medium and the message really came together. I'm going to put this full screen. I hope you can see full screen. Wow. And then let's hit play. Come on.

Video: Hey, Steve, you excited for the CMO Huddles podcast? You know it, Sam, it's going to be flocking awesome.

Drew: We just had a static image. Suddenly, it's not a static image. Suddenly, it's something different. And that really caught my attention; the medium and the message came together beautifully there. The thing I'm hoping that you all will be inspired by is that, at this point, it's so easy to animate static images and so easy to create this that you really need to be thinking about video. Can you just add a little something there? And some of you may have seen my Saturday LinkedIn rants. You know, I have a video every week for it. Those take all of about two minutes to create: create the image in ChatGPT DALL-E, take it over to ImagineArt, and then turn it into a GIF on Easy GIF. I mean, it's literally like that, and I'm doing it now. The difference between that and what Steve and his team did for the 60-second video is where professionalism starts to matter. There was better editing, there was better sequencing. They understood pace and flow much better than I ever could or have the patience to. So I'm curious, from your standpoint, Sam: video production used to be super expensive and time consuming. For a 60-second video like this, let's say you were going to outsource it to professionals, what kind of reductions should people expect in terms of time and cost?

Samantha: Yeah. So with this one, specifically, I can tell you it took me under 10 minutes from concept to running it a few different times to get to something that I thought would work. It's almost hard to say. It depends on your ambitions. From that perspective, it depends upon how sophisticated you want it to be. As I've mentioned, there's that commercial, which I personally think is a good commercial, that ran in the NBA, that I know cost them $2,000, so you can just imagine the cost savings of that. I would expect that on most projects you would have a savings of 30 to 60% at least. But again, that just comes down to the various pieces of the concept. But like many AI workflows, actually, what you want to do is take a look: All right, what is my current workflow for video generation? What are my goals? All right, now, how can I plug this in and make it better? So instead of rolling a production for the filler video, I'm gonna get a much better, actually, the same level of quality if I just do some generations for that piece of it specifically. And honestly, as you saw with Steve and some of the work that he's done, digital avatars are getting shockingly good, and you can create these clones really quickly now too. And so I know there are YouTubers with massive audiences where it's their digital avatar, and it's going to take you several minutes to figure out at all that that person is an avatar. So if you're doing, again, short form like that, just think about the savings on something like that.

Steve: Again, another platform we haven't really talked about—you know, I mentioned Synthesia earlier—but I've kind of gone all in on a platform called HeyGen. HeyGen was focused initially on lip sync. You know, there were two Chinese students in the US who wanted to solve the problem of American movies being dubbed into Chinese. So you can actually take any video that you shoot and put it into HeyGen, and it will translate it into any language you want, and it will look like the speaker is speaking that language natively. That's like the lowest hanging fruit of a use case, especially in B2B for global organizations. Like, if you want a video in Chinese, pop it in there. All of a sudden you have a video in Chinese. And I've created probably—it's a little obsessive—but like 500 versions of AI Steve. He's better looking, he's got better hair. He wears costumes. So when I do video content, I'm obviously—now I've got, you know, I've got my selfie light. I have my vanity thing. I'm like everybody gets so wrapped up in doing a video. Now what I'll do for my personal content is I'll record the audio and put it through one of my avatars, because I know that avatar is going to look better than me, and I can edit my audio in a way that gives me a better performance. And so that's a huge time savings and performance anxiety savings, you know, so clone executives.

Drew: You know that there's a lot of folks in our community who have gotten very used to creating all their own written content, all their own static images and so forth. When in your mind is it time to call in the professionals? And what kind of level project, and when would you want to be called in? Because, I mean, there are certain things that folks should just learn how to do and do it on their own.

Steve: It's a great question. I look at one of my favorite clients right now (all my clients are my favorites): I'm working with an organization called Future Keepers. And Future Keepers is essentially trying to tell the story of the global south and the energy transition around solar. We have a small team using AI tools where we're creating five to ten videos a day in six or seven different languages and putting that out there. And the last newsletter that we did for Future Keepers was interesting. I had a Midjourney animated header in there. We had an interactive infographic from Gemini. That's like a whole new thing that nobody's talked about. I just found it, an interactive infographic. We had a Veo video in there. We had a HeyGen video in there. And I'm like, "Holy crap, all of a sudden we've had, like, the most technically sophisticated newsletter in the history of newsletters." But, you know, what is that ultimate goal? Is there a level of creativity that you want to achieve? I would call in the professionals when you want to start thinking about quality and scale, and how you build systematic series out of this. You know, I look at ElevenLabs as great. There's no reason at all why organizations shouldn't be using ElevenLabs to put out a weekly podcast. You know, Perplexity is doing a daily podcast using AI voices. The voice synthesis is so good, so just hire a professional or have your team do it. It's as easy as popping a PDF into ElevenLabs, and it will give you a podcast. And so all of a sudden you have another low-cost content type out there that can fit into your overall content strategy.

Samantha: Yeah, actually I want to add to that, because I think it makes a very important point. Six months ago, we would have never said that. Two months ago, we might not have said that. But now AI voice specifically is hitting this different level, which is almost like an emotional intelligence. Essentially, most people cannot tell the difference between talking to a human and talking with these more recent tools, ElevenLabs being one of them, that have this higher level of emotional intelligence. And so these podcasts are engaging and funny and make these connections that are very unique, whereas three months ago, I probably would have said, "No, that's not necessarily what you want to do." But that is the pace, that is the rate of where we are. And next week, who knows?

Drew: Yeah. I mean, I played with the NotebookLM, and, you know, just blown away by the podcast that they can create out of just anything. The better the content that you give them, the better it is. Funny enough, we did have a question about—let's say you have a large amount of video and raw footage and you need to edit it down to a minute or two. And I guess the question—the question I have for the questioner is, are you hoping that the AI will pick the video for you, or are you just looking to edit it yourself? And maybe I'll ask that question: Are there tools right now that would pick the video for you?

Samantha: Yeah, there are. So the one that I showed on the back end of Kapwing—they have that functionality—but others do as well. I want to say OpusClip does as well. Essentially, you take a large piece of footage, you upload it, and then it's going to generate for you multiple different options. And it's using AI to figure out, "These are the most compelling moments in your 60-minute video," and we've spliced them together into this format and added some music behind it, as well as the text that you can then shift and change and edit a little bit. But there are multiple tools that do that for sure.

Drew: Yeah, I'm going to mention Vidyo.ai. They're a partner of CMO Huddles, and they can take long videos, find the best clips, edit them together.

Steve: Descript is such a great example, too. And if you want to get a sense of the future of product design and interfaces, Descript is AI-forward, I think, in how they approach that. You just go on Descript and be like, "I want to do this." You know, you don't have to click on the dropdown menu. It just has that natural language interface—that's a great tool. Eddify AI is another weird one out there. They're designing themselves essentially for those use cases where you might have 20 hours of footage, so you're building a documentary or something. You could drop all 20 hours in there, and it'll build a documentary for you. I haven't had the right use case for that. So if anybody has 20 hours of video they want to test...

Drew: Send it your way in a giant hard drive? You know, I have to say, though, part of this experience for me was just how exciting it is. And I also encourage every CMO to sort of get into something and play with it a little bit, because it really will open your eyes. So when you're communicating with other folks, you have a sense of at least somewhat the art of the possible. What are you guys—what are you two personally most excited about right now when you're looking at the world of generative AI?

Steve: Yeah, I mean, to me, it's the beginning of a creative renaissance, right? And you're seeing this initial phase of, "Oh, it's slop, and it's weird," and it's like, yes, all of that is true. But this is also perhaps the most interesting and complicated art form we've ever created as humans. To do these well—to do these artistically—which we haven't seen a lot of yet. Mostly it's been like car explosions and robots and trailers—those are great. But to get to the point where we're using this to evoke human emotion and real human storytelling is going to take a level of artistry that very few people are going to be able to do. So as those artists emerge, I'm thrilled to see it, because you have to—again, you have to understand storytelling and lighting and story and character and performance, right? You're a one-man band. You can be a one-person band in creating a movie. And that blows my mind, and that's what I'm interested to see—what do the next five years look like as the real creators of this, who may not even know how to use the tools today, but as those creators get involved and start using them, what's possible? It's a lot more interesting than influencers.

Drew: The Orson Welles of yesteryear is going to come out and figure out, "Oh, I can be the writer, director, designer, production, cameraman, all of it. I just need to have the great story in my head and get it out." Sam?

Samantha: Exactly. I agree with everything that Steve said, and I'm excited about all those things. The things that I would add to that is that even where we are now—with Tribeca Film Festival, they had a short film, all AI-created short film, as part of the competition this year. So that shows you—actually go and check out, even if you just go to Sora and check out what regular people are doing every single day, that will give you a sense of like, "Oh my gosh." And same thing with video—there's channels there where you can almost watch it like you watch TV, to get that understanding. But what I love—and the reason that I fell in love with this form of technology and just generative art overall—is exactly what Steve said, which is anyone can now create something pretty incredible. And so what I would say to that is, then, let's stop looking at trying to recreate what we made with the regular tools now in this new form of art. Let's create something completely different in terms of storytelling, and that goes for the rest of content marketing. Because the bad news with the good news of the democratization—the bad news is your content has to be really great. Because, you know what, it's getting really easy to create really great content. So the bar goes up, and then the bar goes up, and then the bar goes up. So you do have to really lean into that. So it's exciting, but also a lot of pressure. Frankly, you know, a lot of pressure.

Drew: Yes, and don't hesitate to hire a coach like Sam or a professional like Steve, just to get to the level that you know you're used to when you used to do professional things and paid people to do this stuff. It makes a big difference working with you. So Sam, where can people find you?

Samantha: So my email is actually sam@phyusion.com. Definitely feel free to hit me up and send me an email. You can also contact me through LinkedIn as well.

Drew: And Steve, where can people find you?

Steve: I'm also on LinkedIn. Steve Mudd, steve.mudd@talentless.ai. You can go find me on TikTok if you're really bored, or if you want to see some weird stuff, find our YouTube channel. Just look for Talentless AI. I'm putting out weird stuff every day. I just—I have to create. I have to put it out there.

Drew: So I love it. It has been such a treat working with both of you. I'm actually a little sad that we're coming to an end. So thank you both for your awesome talent, guidance, and hard work. We all really appreciate you. You know, I can barely acknowledge my worthiness to have worked with you. So thank you.

If you're a B2B CMO and you want to hear more conversations like this one, find out if you qualify to join our community of sharing, caring, and daring CMOs at cmohuddles.com.

Show Credits

Renegade Marketers Unite is written and directed by Drew Neisser. Hey, that's me! This show is produced by Melissa Caffrey, Laura Parkyn, and Ishar Cuevas. The music is by the amazing Burns Twins and the intro Voice Over is Linda Cornelius. To find the transcripts of all episodes, suggest future guests, or learn more about B2B branding, CMO Huddles, or my CMO coaching service, check out renegade.com. I'm your host, Drew Neisser. And until next time, keep those Renegade thinking caps on and strong!